AI Story Writing Versus Human Beings

I’d be very interested to know whether any of these were AI journalism.

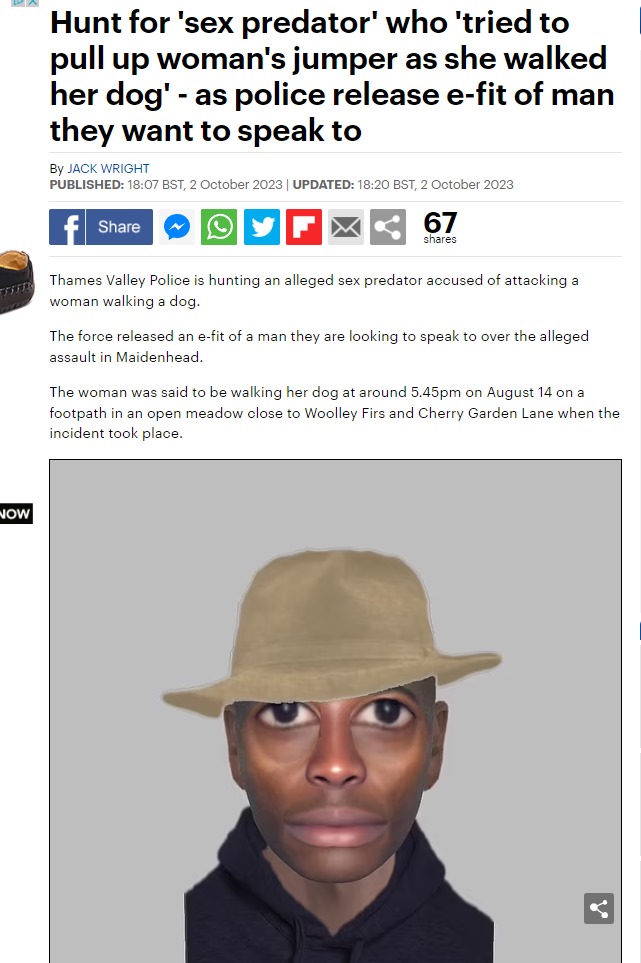

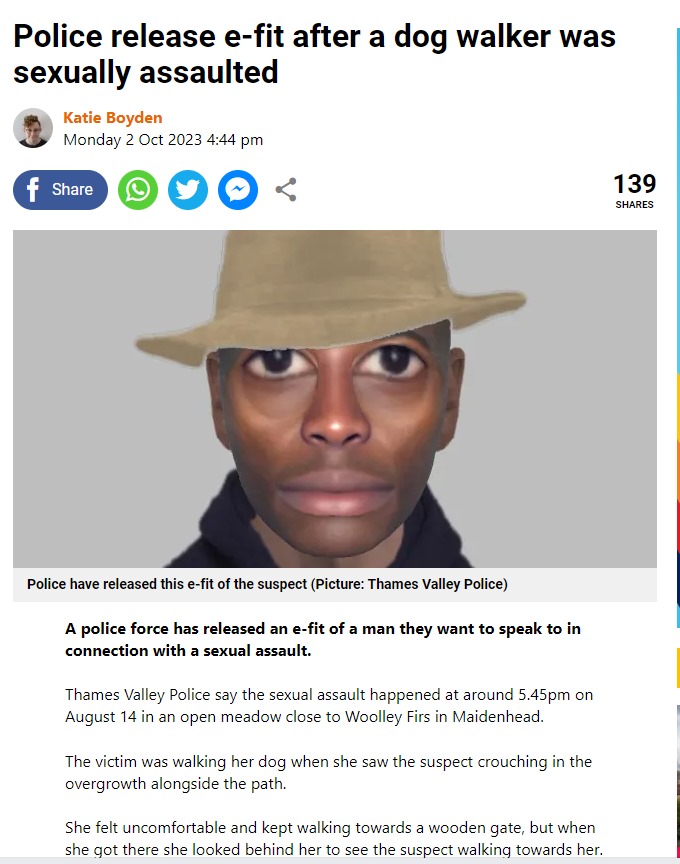

The first two reports, both from British national newspapers, say that police have released an artist’s impression of someone they want to question in relation to an attack.

The first report covers the appeal by Thames Valley Police and gives some of the details.

The second report does the same thing, giving details about the woman who had been walking the dog and saying: “Police release e-fit after a dog walker was sexually assaulted”.

Only in the case of the Daily Star, I believe, can we be fairly sure a human being was on the job. Its headline read: “Police e-fit of ‘alien Crazy Frog’ man goes viral – ‘he won’t blend in anywhere’.”

No prizes for guessing which story went insanely viral on social media and, rather surprisingly given the quality of the artist’s impression, a suspect has been arrested.

Of course, I don’t have any inside knowledge of how the three stories were put together. But when using AI, journalists often do little more than edit the text, whereas a journalist who is creating the story has to take the time to look at the pictures and videos, as well as read the source press release.

When you do that, you can’t help but notice exactly what the real story is, as the Daily Star reporter did.